Global Multi-Sensory AI Market Size, Share, Industry Analysis Report By Technology (Natural Language Processing (NLP), Computer Vision, Speech Recognition, Machine Learning & Deep Learning, Sensor Fusion), By Deployment Mode (Cloud-Based, Edge Computing, On-Premises), By Application (Healthcare, Automotive, Retail, Entertainment, Smart Homes, Others), By End User (Consumer Electronics, Enterprises, Healthcare Providers, Automotive Manufacturers, Retailers, Others), By Region and Companies - Industry Segment Outlook, Market Assessment, Competition Scenario, Trends and Forecast 2025-2034

- Published date: Nov. 2025

- Report ID: 163204

- Number of Pages: 284

Report Overview

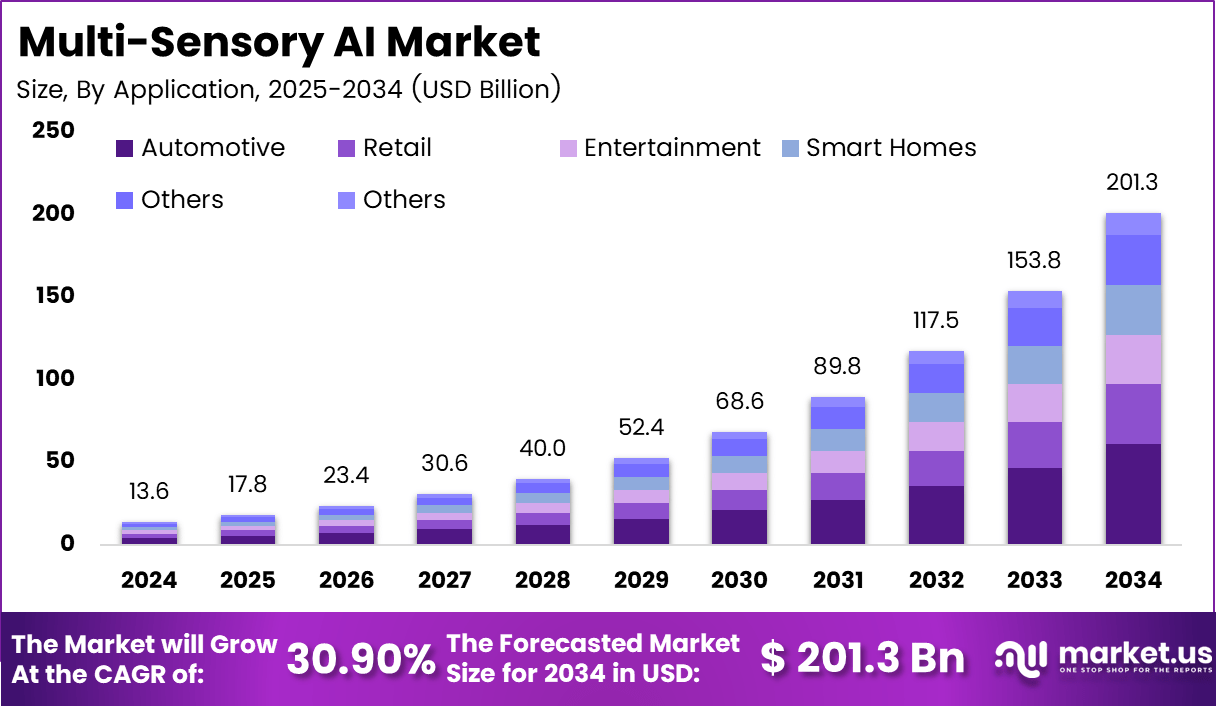

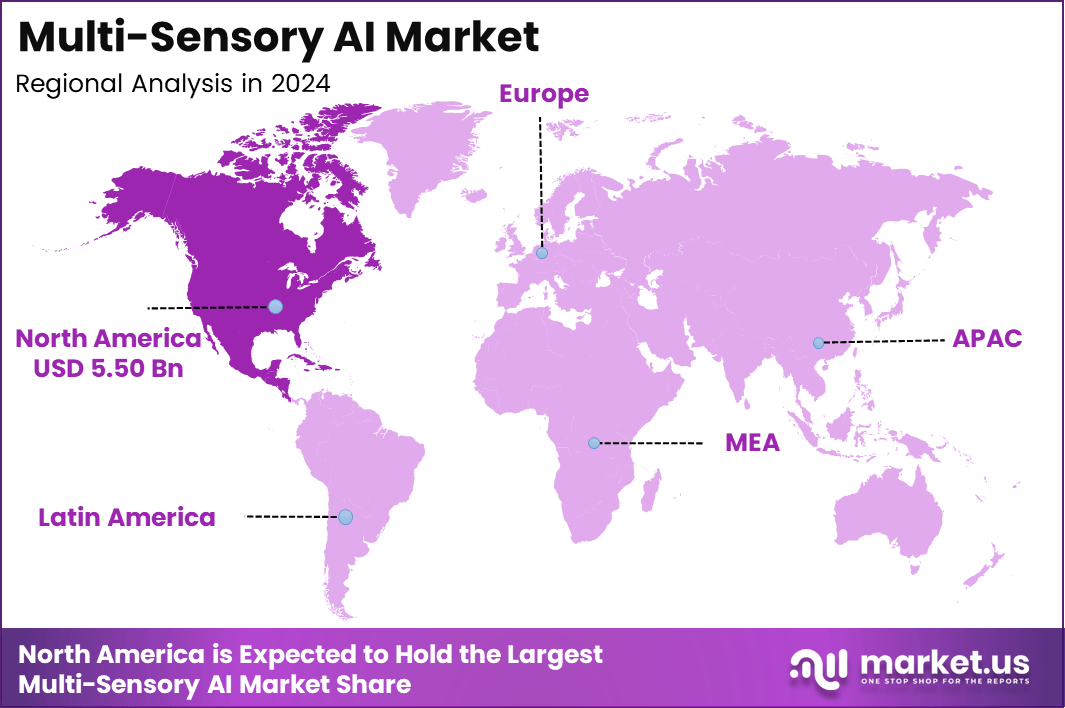

The Global Multi-Sensory AI Market generated USD 13.6 billion in 2024 and is projected to grow from USD 17.8 billion in 2025 to about USD 201.3 billion by 2034, a CAGR of 30.9% over the forecast period. In 2024, North America held a dominant market position with a 40.4% share, or about USD 5.50 billion in revenue.
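The headline figures can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the values quoted above. The helper name `cagr` is illustrative, and the check assumes annual compounding over 9 years (2025 to 2034) or 10 years (2024 to 2034).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report overview, in USD billion.
value_2024, value_2025, value_2034 = 13.6, 17.8, 201.3
north_america_share = 0.404

print(f"CAGR 2025-2034: {cagr(value_2025, value_2034, 9):.1%}")
print(f"CAGR 2024-2034: {cagr(value_2024, value_2034, 10):.1%}")
print(f"North America 2024: USD {value_2024 * north_america_share:.1f} bn")
```

Both spans round to about 30.9%, and 40.4% of USD 13.6 billion gives roughly USD 5.5 billion, consistent with the stated North American revenue.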

The multi-sensory AI market covers technologies and systems that integrate multiple human-like sensory modalities, such as vision, hearing, and touch, typically combined through sensor fusion, so that machines can perceive, interpret, and act in real-world environments in a more human-like way. These systems are applied in robotics, autonomous vehicles, healthcare monitoring, smart manufacturing, and immersive consumer electronics.

Key drivers of this market include rapid advances in machine learning and deep learning algorithms that improve perception and decision-making. Industrial automation benefits greatly from multi-sensory AI, which enables robots to handle complex tasks by processing visual, auditory, and tactile data together.

Another significant growth driver is autonomous vehicle development, which relies on combining LiDAR, radar, cameras, and microphones for real-time navigation and safety decisions. In healthcare, AI-powered wearables that monitor vital signs in real time are gaining adoption because they can reduce hospital visits and improve patient outcomes. Accelerating digital transformation across industries further supports the expansion of multi-sensory AI.
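The report does not describe how vehicle sensor readings are combined. As one textbook illustration of sensor fusion, the sketch below merges independent distance estimates from a camera, radar, and LiDAR with inverse-variance weighting, so that more precise sensors count for more. The readings, noise variances, and the `Measurement`/`fuse` names are hypothetical, and the method assumes unbiased, independent Gaussian noise; real driving systems often use Kalman filters or learned fusion models that also track motion over time.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    sensor: str
    distance_m: float  # estimated distance to an obstacle, in metres
    variance: float    # assumed-known noise variance, in m^2


def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent estimates.

    Returns (fused_estimate, fused_variance).
    """
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    estimate = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    return estimate, 1.0 / total


# Hypothetical readings of the same obstacle.
readings = [
    Measurement("camera", 24.8, 1.00),
    Measurement("radar", 25.3, 0.25),
    Measurement("lidar", 25.1, 0.04),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m (variance {variance:.3f} m^2)")
```

The fused variance (1/30, about 0.033 m^2) is smaller than that of any single sensor, which is the basic payoff of combining redundant measurements.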

Key Takeaways

- Computer Vision technology held the largest share at 36.2%, driven by its expanding role in object detection, gesture recognition, and environmental perception across industries.

- The Cloud-Based deployment mode accounted for 50.8%, supported by scalable infrastructure that enables real-time data fusion from multiple sensors.

- The Automotive application segment led with 30.3%, reflecting the growing use of multi-sensory AI in autonomous driving, safety systems, and in-vehicle experience optimization.

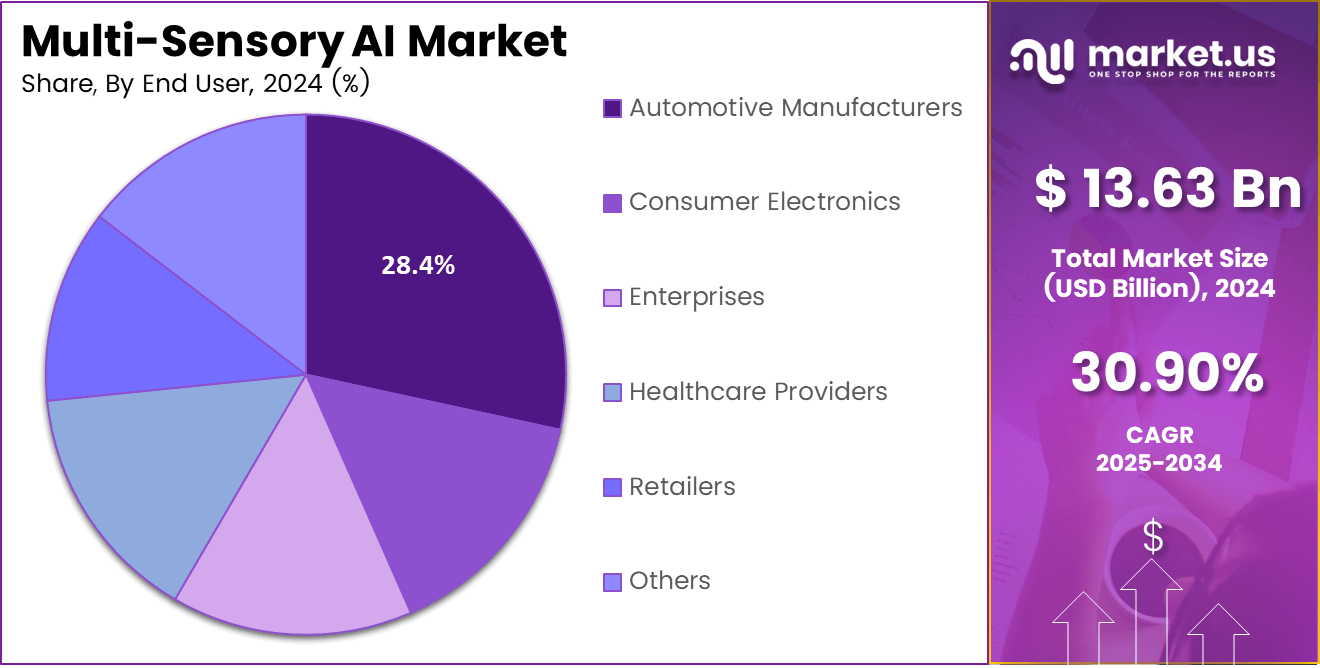

- Automotive Manufacturers represented 28.4% of end-user adoption, underscoring their focus on integrating AI-driven sensory intelligence to enhance operational precision and driver assistance systems.

- North America captured 40.4% of the global market, supported by advanced R&D in AI, sensor integration, and vehicle automation technologies.

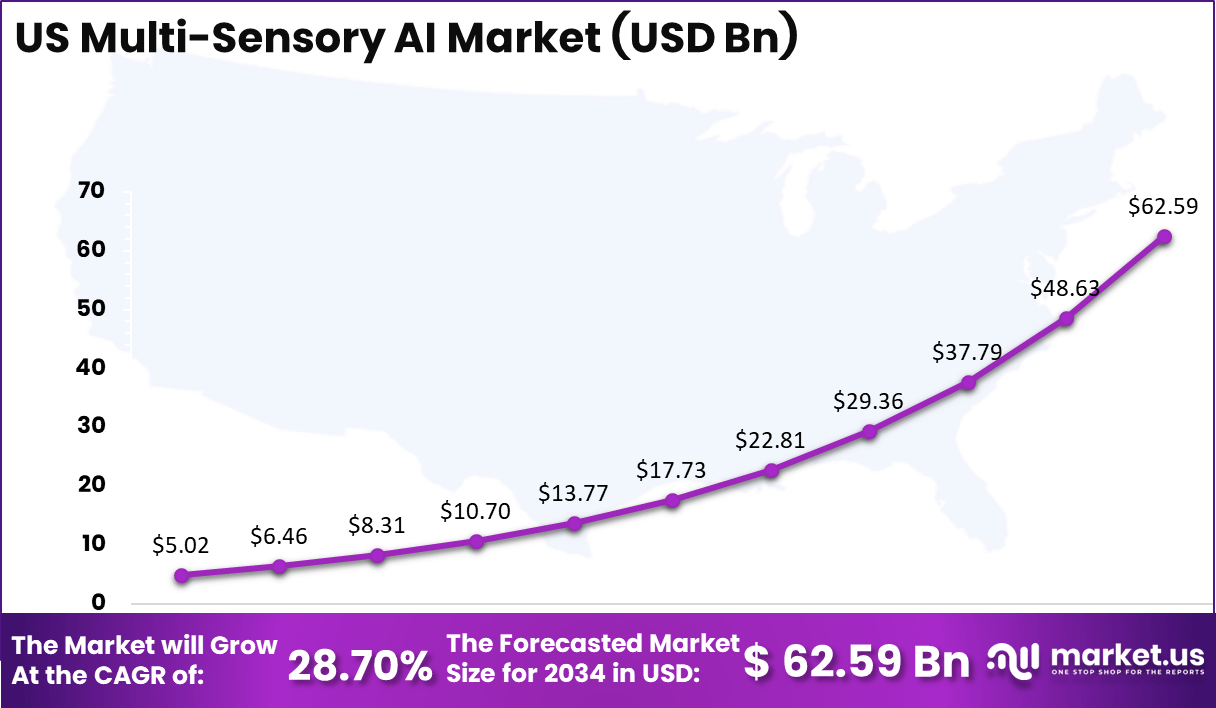

- The US market reached USD 5.02 Billion in 2024, growing at a strong 28.7% CAGR, fueled by rapid innovation in multi-modal AI, sensor fusion frameworks, and adoption in smart mobility ecosystems.

US Market Size

The US Multi-Sensory AI Market is valued at USD 5.02 billion in 2024 and projected to reach USD 62.59 billion by 2034, growing at a strong CAGR of 28.7%. The growth is fueled by increasing adoption of AI-driven sensor fusion technologies across automotive, healthcare, defense, and consumer electronics sectors, enhancing intelligent perception and automation capabilities nationwide.
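To see what a 28.7% CAGR implies year by year, the sketch below compounds the 2024 US base value forward at a constant rate. This is a simplifying assumption: the report's own annual estimates need not follow a smooth compounding curve, and the `project` helper is hypothetical.

```python
def project(base_value: float, rate: float, start_year: int,
            end_year: int) -> dict[int, float]:
    """Compound a base-year value forward at a constant annual rate."""
    return {year: base_value * (1 + rate) ** (year - start_year)
            for year in range(start_year, end_year + 1)}


# US base value (USD billion) and CAGR as stated in the text.
us_path = project(5.02, 0.287, 2024, 2034)
for year, value in us_path.items():
    print(f"{year}: USD {value:.2f} bn")
```

Under that assumption the 2034 value comes out at roughly USD 62.59 billion, matching the stated US forecast.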

Multi-sensory AI represents the next phase of intelligent automation, where systems can process visual, auditory, and tactile information simultaneously to interpret real-world scenarios more accurately. This convergence of sensing technologies with machine learning is enabling breakthroughs in autonomous driving, human–machine interaction, and adaptive smart environments.

North America dominates the global multi-sensory AI market, valued at USD 5.50 billion in 2024, backed by strong technological infrastructure, advanced AI research hubs, and wide adoption across industries such as automotive, healthcare, and consumer electronics. The region's lead is reinforced by heavy investment in autonomous systems, robotics, and immersive technologies, along with government initiatives promoting AI integration in manufacturing and defense.

North America leads the multi-sensory AI market, followed by Europe, which benefits from a strong regulatory framework and automation initiatives. Asia-Pacific is the fastest-growing region, driven by large investments in AI devices and sensor production in China, Japan, and South Korea. Latin America and the Middle East & Africa (MEA) are emerging regions with rising adoption in smart cities and healthcare; together, these regions shape the global expansion of multi-sensory AI technologies.

By Technology

Computer vision is the leading technology segment in the multi-sensory AI market, with a 36.2% share. This technology enables AI systems to process and interpret visual data, making it essential for applications that require real-time scene understanding and object recognition. Its widespread use in areas such as autonomous vehicles, industrial automation, and security systems highlights its importance in modern AI-driven solutions.

Recent trends show that over 54% of global companies have adopted AI-powered analytics platforms that leverage computer vision for enhanced operational efficiency. The integration of deep learning and edge computing is further accelerating adoption, allowing for real-time visual processing and improved decision-making in complex environments.

By Deployment Mode

Cloud-based deployment dominates the multi-sensory AI market with 50.8% share. This reflects the growing preference for AI solutions hosted on remote servers, offering flexible scalability, lower infrastructure costs, and easier access to advanced analytics. Cloud platforms support the processing of vast sensory data by providing powerful computing resources and centralized AI model management.

Many businesses benefit from cloud-based AI as it facilitates collaboration, rapid updates, and integration across wide user bases without heavy on-site hardware investment. The cloud also supports integration with Internet of Things (IoT) devices, enabling multi-sensory AI to deliver real-time insights across industries like automotive and healthcare more efficiently.

By Application

The automotive segment accounts for 30.3% of the multi-sensory AI market, making it the largest application segment. AI's sensory capabilities are transforming vehicles through advanced driver-assistance systems (ADAS), autonomous driving technologies, and enhanced safety features.

Multi-sensory AI enables vehicles to gather and interpret data from visual, audio, and motion sensors to better understand traffic, obstacles, and driver behavior. This high adoption rate reflects automotive manufacturers' strong focus on smart, connected vehicles that improve safety and user experience. The need for real-time processing of complex sensory inputs makes multi-sensory AI a crucial technology in this sector.

By End User

Automotive manufacturers are the main end-users of multi-sensory AI, representing 28.4% of the market. These manufacturers invest in AI technologies to enhance vehicle intelligence, developing systems that improve safety, navigation, and driver comfort. Multi-sensory AI helps manufacturers innovate in areas such as predictive maintenance, in-car user interactions, and autonomous driving features.

The large share signals the automotive industry’s commitment to embedding sensory AI deeply within vehicle ecosystems to stay competitive. These investments are also driven by regulatory pressures for safer vehicles and consumer demand for smarter driving experiences.

Key Market Segments

By Technology

- Natural Language Processing (NLP)

- Computer Vision

- Speech Recognition

- Machine Learning & Deep Learning

- Sensor Fusion

By Deployment Mode

- Cloud-Based

- Edge Computing

- On-Premises

By Application

- Healthcare

- Automotive

- Retail

- Entertainment

- Smart Homes

- Others

By End User

- Consumer Electronics

- Enterprises

- Healthcare Providers

- Automotive Manufacturers

- Retailers

- Others

Key Regions and Countries

- North America

- US

- Canada

- Europe

- Germany

- France

- The UK

- Spain

- Italy

- Rest of Europe

- Asia Pacific

- China

- Japan

- South Korea

- India

- Australia

- Singapore

- Rest of Asia Pacific

- Latin America

- Brazil

- Mexico

- Rest of Latin America

- Middle East & Africa

- South Africa

- Saudi Arabia

- UAE

- Rest of MEA

Driver Analysis

The rising use of robots worldwide is a major driver of multi-sensory AI market growth; for instance, sales of professional service robots grew by 30% in 2023 compared with the previous year. Robots equipped with multi-sensory AI can process vision, touch, and sound data together, enabling them to better understand and interact with their environment.

Multi-sensory perception improves robots' ability to navigate spaces, handle objects precisely, and communicate effectively with humans, which encourages companies to invest in these advanced robotic systems. The expansion of human-robot collaboration also increases the need for robots that can interpret speech and physical cues, improving workplace efficiency.

Restraint Analysis

A key restraint is the limited understanding of human sensory processing, which makes it difficult to replicate in AI systems. Human senses operate in complex ways influenced by context, emotions, and individual differences, and AI models still struggle to combine multiple sensory inputs in a way that mimics true human perception. This makes it difficult to build reliable, universally effective multi-sensory AI.

About 30% of businesses face significant hurdles in managing sensory data complexity, which slows product development and deployment. Bridging this gap requires integrating neuroscience with AI research to better interpret sensory signals. Until these challenges are addressed, multi-sensory AI growth may remain restrained due to uncertainties about sensory accuracy and user experience reliability.

Opportunity Analysis

Wearable devices with AI sensors offer a strong opportunity for multi-sensory AI expansion. Consumers increasingly want health and fitness monitors that provide real-time, multi-sensory data like heart rate, movement, and temperature. AI sensors help deliver personalized insights and improved user experiences in smartwatches and hearables.

The global market for AI-enabled wearables is expanding rapidly, driven by demand in healthcare and fitness applications. Healthcare providers use these devices to monitor patient vitals remotely, improving early diagnosis and care personalization. The trend toward AI-driven wearables is expected to continue accelerating, with billions invested in sensor innovation and integration to meet evolving customer needs.

Challenge Analysis

Multi-sensory AI systems face the challenge of handling vast amounts of diverse sensory data simultaneously. Combining inputs from cameras, microphones, touch sensors, and other devices demands significant computational power to process accurately and in real time. Synchronizing these inputs is critical to avoid errors and provide a coherent understanding of the environment.

This complexity raises the bar for hardware design and AI algorithms, which must be efficient to work within cost and power constraints. As a result, companies struggle to develop practical multi-sensory AI systems that perform well outside lab settings. Overcoming this requires innovation in edge computing, sensor fusion techniques, and software optimization to support real-world applications effectively.
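Synchronization, noted above as critical, usually starts with aligning timestamps across streams that sample at different rates. The sketch below pairs each camera frame with the nearest audio feature frame within a tolerance and drops frames with no close match rather than fusing stale data. It assumes both streams share one clock and arrive sorted; the timestamps and the `align` helper are hypothetical.

```python
from bisect import bisect_left


def align(reference_ts: list[float], other_ts: list[float],
          tolerance: float) -> list[tuple[int, int]]:
    """Pair each reference sample with the nearest sample of another stream.

    Both lists hold timestamps in seconds, sorted ascending, on a shared clock.
    Pairs more than `tolerance` apart are dropped instead of being fused.
    """
    pairs = []
    for i, t in enumerate(reference_ts):
        j = bisect_left(other_ts, t)
        # Nearest candidates: the last sample before t and the first at/after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(other_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(other_ts[k] - t))
        if abs(other_ts[best] - t) <= tolerance:
            pairs.append((i, best))
    return pairs


camera_ts = [0.000, 0.033, 0.066, 0.100]  # ~30 fps camera frames
audio_ts = [0.000, 0.010, 0.020, 0.030, 0.040, 0.090, 0.100]  # gap after 0.040 s
print(align(camera_ts, audio_ts, tolerance=0.005))
```

Here the 0.066 s camera frame is dropped because the audio stream has a gap between 0.04 s and 0.09 s, so the output is `[(0, 0), (1, 3), (3, 6)]`.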

Emerging Trends

Emerging trends in multi-sensory AI show a strong move toward blending multiple senses such as sight, sound, and touch to create more human-like understanding. Systems can now take in various data types simultaneously, including text, images, and audio, allowing AI to provide richer, context-aware responses.

For instance, over 44% of AI advancements in 2025 focus on integrating more than one modality, improving applications across healthcare, retail, and manufacturing. This multi-sensory approach enables systems to perform tasks like generating captions for videos or summarizing information from mixed media inputs more accurately than before.

This integration is not only a technical advance; it also improves real-world experiences. Healthcare applications increasingly use multi-sensory AI to combine speech recognition, visual data, and sensor inputs for timely, personalized patient monitoring. Over 55% of new AI systems deployed in enterprises include multi-sensory capabilities to improve human-machine interaction.
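The multimodal integration described in this section can take many forms. One simple scheme, assumed here because the report does not specify an architecture, is late fusion: each modality's model outputs class probabilities, and a weighted average produces the final decision. The labels, probabilities, weights, and the `late_fusion` helper below are hypothetical; many modern multimodal systems fuse learned features earlier in the network instead.

```python
def late_fusion(per_modality: dict[str, list[float]],
                weights: dict[str, float]) -> list[float]:
    """Weighted average of per-modality class probability vectors."""
    total = sum(weights[name] for name in per_modality)
    num_classes = len(next(iter(per_modality.values())))
    fused = [0.0] * num_classes
    for name, probs in per_modality.items():
        for c in range(num_classes):
            fused[c] += weights[name] * probs[c] / total
    return fused


labels = ["doorbell", "speech", "alarm"]
# Hypothetical class probabilities from separate vision and audio models.
probs = {
    "vision": [0.2, 0.6, 0.2],
    "audio": [0.1, 0.2, 0.7],
}
fused = late_fusion(probs, {"vision": 0.4, "audio": 0.6})
print(labels[fused.index(max(fused))])  # alarm
```

With audio weighted at 0.6, the fused scores are about 0.14, 0.36, and 0.50, so the system reports `alarm` even though the vision model alone favored `speech`.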

Growth Factors

Growth factors behind this rise in multi-sensory AI include advances in machine learning, deep learning, and sensor fusion technologies, which allow efficient processing of complex sensory data. In 2024, these technologies made up the largest segment of multi-sensory AI techniques, demonstrating their importance in enhancing AI’s perceptual and reasoning skills.

Additionally, the surge in IoT devices feeds a continuous stream of sensory data, driving about 45% yearly growth in this specialized AI sector. Improved cloud infrastructure and real-time data processing have also lowered barriers for deploying these solutions in multiple industries.

The expanding use cases across sectors further fuel multi-sensory AI's growth. Healthcare is a prominent example, where AI-powered imaging, wearable health monitors, and robotic assistants are improving patient care while cutting costs. More than half of AI investments in enterprise healthcare involve multi-sensory AI technologies such as speech recognition and sensor fusion.

Other sectors, including automotive and manufacturing, also benefit, as these AI systems can better analyze equipment conditions, predict failures, and automate complex operations by fusing visual, auditory, and textual data streams. This widening adoption positions multi-sensory AI as a key driver of smarter, more context-aware AI applications across industries.

SWOT Analysis

Strengths

- Rapid advancements in machine learning, sensor fusion, and edge computing are creating highly adaptive and context-aware systems.

- Integration of multiple sensory inputs, such as vision, sound, and touch, enhances accuracy and real-time decision-making.

- Strong R&D investments from global tech and automotive leaders strengthen innovation capabilities.

- Companies like NVIDIA, Sony, and Intel are developing AI chips and perception systems for autonomous vehicles and industrial automation.

Weaknesses

- High implementation costs and technical complexity limit large-scale adoption, especially for SMEs.

- Extensive data collection, sensor calibration, and model training increase operational time and expenses.

- Lack of skilled professionals and infrastructure readiness slows deployment.

- Privacy and regulatory compliance challenges hinder faster adoption in sensitive sectors like healthcare and automotive.

Opportunities

- Expanding use in healthcare, autonomous vehicles, smart homes, and AR/VR entertainment creates strong growth potential.

- Rising demand for emotion-aware, human-interactive AI opens new business avenues.

- Edge-AI and 5G enable faster, localized processing and reduce reliance on cloud infrastructure.

- Government funding in the US, Japan, and Europe is supporting startups and innovation in sensory AI technologies.

Threats

- Data privacy, consent, and ethical concerns remain major risks in multi-modal sensing applications.

- Cybersecurity threats in interconnected sensor networks can cause data breaches or manipulation.

- Rapid technological evolution may result in hardware obsolescence and increased costs.

- Competitive intensity and intellectual property disputes among AI developers could slow collaboration and innovation.

Key Players Analysis

The Multi-Sensory AI Market is driven by technology giants such as IBM Corporation, Microsoft, Amazon Web Services Inc., Meta, and NVIDIA. These companies integrate multi-modal AI systems that process visual, auditory, tactile, and environmental sensor data to enable immersive experiences and contextual decision-making. Their platforms support applications in autonomous systems, human-machine interaction, smart environments, and robotics, providing scalable intelligence across domains.

Analytical and enterprise-focused firms including Palantir Technologies, Databricks, Nuance Communications, and TechSee specialize in AI frameworks that merge sensor data streams with language models, image recognition, and audio context. Their solutions are used in sectors ranging from healthcare diagnostics and industrial inspection to customer service automation and digital twins. These companies help organizations extract actionable insights from complex multi-sensor datasets.

Specialized hardware and sensory-software companies such as Aryballe Technologies, Immersion Corporation, Advanced Micro Devices Inc. (AMD), Mobileye, and other market contributors focus on enabling multi-sensory perception through tactile feedback, haptics, smell sensors, and advanced vision modules. Their innovations enhance the breadth of AI interaction beyond conventional audiovisual inputs, deepening real-world responsiveness and immersive interface design.

Top Key Players

- Aryballe Technologies

- Databricks

- IBM Corporation

- Immersion Corporation

- Meta

- Microsoft

- Mobileye

- Nuance Communications

- NVIDIA

- Palantir Technologies

- TechSee

- Advanced Micro Devices Inc.

- Amazon Web Services Inc.

- Others

Recent Developments

- June 2025: At its annual Data + AI Summit, Databricks launched Agent Bricks, a new tool for building AI agents optimized for enterprise data with minimal user input. It also unveiled MLflow 3.0, redesigned for generative AI to enable better monitoring of AI agents across cloud environments.

- January 2025: Meta pushed forward with mixed reality products like the Quest 3S and previewed AI-powered glasses under the Orion project for true augmented reality experiences. Meta enhanced AI assistants in glasses to be proactive and context-aware, aiming to integrate AI deeply into daily digital interactions and the metaverse.

Report Scope

| Report Features | Description |
|---|---|
| Market Value (2024) | USD 13.6 Bn |
| Forecast Revenue (2034) | USD 201.3 Bn |
| CAGR (2025-2034) | 30.9% |
| Base Year for Estimation | 2024 |
| Historic Period | 2020-2023 |
| Forecast Period | 2025-2034 |
| Report Coverage | Revenue forecast, AI impact on market trends, share insights, company ranking, competitive landscape, recent developments, market dynamics, and emerging trends |
| Segments Covered | By Technology (Natural Language Processing (NLP), Computer Vision, Speech Recognition, Machine Learning & Deep Learning, Sensor Fusion), By Deployment Mode (Cloud-Based, Edge Computing, On-Premises), By Application (Healthcare, Automotive, Retail, Entertainment, Smart Homes, Others), By End User (Consumer Electronics, Enterprises, Healthcare Providers, Automotive Manufacturers, Retailers, Others) |
| Regional Analysis | North America – US, Canada; Europe – Germany, France, The UK, Spain, Italy, Russia, Netherlands, Rest of Europe; Asia Pacific – China, Japan, South Korea, India, New Zealand, Singapore, Thailand, Vietnam, Rest of Asia Pacific; Latin America – Brazil, Mexico, Rest of Latin America; Middle East & Africa – South Africa, Saudi Arabia, UAE, Rest of MEA |
| Competitive Landscape | Aryballe Technologies, Databricks, IBM Corporation, Immersion Corporation, Meta, Microsoft, Mobileye, Nuance Communications, NVIDIA, Palantir Technologies, TechSee, Advanced Micro Devices Inc., Amazon Web Services Inc., Others |
| Customization Scope | Customization for segments and region/country level will be provided. Additional customization can be done based on requirements. |
| Purchase Options | Three license options are available: Single User License, Multi-User License (up to 5 users), and Corporate Use License (unlimited users and printable PDF) |
